Over the last year, a prevailing sentiment has taken hold: AI will not necessarily replace humans, but humans who use AI will replace those who don’t.

This sentiment also applies to the next era of cybersecurity, which has been rapidly unfolding over the last year. Recent breakthroughs in generative AI hold enormous promise for modern defenders. Amid the dual pressures of accelerating attacks (some now unfolding in just over two minutes) and persistent skills shortages, generative AI has the potential to be not just an accelerator, but a veritable force multiplier for teams of all sizes and maturity levels. We’ve seen these gains firsthand while working with early adopters of Charlotte AI (made generally available last month), with users reporting speed gains of up to 75% across supported workflows.

Making humans as effective and efficient as possible begins with giving them the best tools for the job. Today’s AI landscape presents organizations with a rapidly growing and often dizzying array of foundational models developed by the open-source community, startups and large enterprises. Each model is unique in its strengths and applications, varying in speed, accuracy, training data, computational intensiveness and the underlying risks it poses to end-users. Invariably, selecting just one model, or one family of models, forces users to accept trade-offs across one or more of these variables.

Security teams shouldn’t have to compromise on the tools they use to protect their organizations. In an ideal world, their tools should support the maximum levels of speed and accuracy required across the myriad workflows they oversee, without trade-offs on performance and risk — and without placing the burden on defenders to calculate computational complexity.

This is one of the foundational principles on which we’ve designed Charlotte AI. To optimize Charlotte AI’s performance and minimize the drawbacks of relying on any individual model, we’ve architected Charlotte AI as a multi-AI system: one that partitions workflows into discrete sub-tasks and enables our data scientists to isolate, test and compare how effectively different models perform on each task. This approach enables our experts to dynamically interchange the foundational models applied across workflows, ensuring end-users can interact with an ever-improving AI assistant fueled by the industry’s latest generative AI technologies.
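To make the idea of comparing and interchanging models per sub-task concrete, here is a minimal Python sketch. It is not Charlotte AI's implementation: the `Model` type, `score`, `pick_best`, the two toy candidates and the sample cases are all hypothetical, and a real evaluation would use far richer metrics than exact match.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical stand-in: a "model" is any callable that maps a prompt to text.
Model = Callable[[str], str]
Case = Tuple[str, str]  # (prompt, expected output)


def score(model: Model, cases: List[Case]) -> float:
    """Fraction of cases where the model output equals the expected text."""
    hits = sum(1 for prompt, expected in cases if model(prompt).strip() == expected)
    return hits / len(cases)


def pick_best(candidates: Dict[str, Model], cases: List[Case]) -> str:
    """Name of the highest-scoring candidate for one sub-task."""
    return max(candidates, key=lambda name: score(candidates[name], cases))


# Two toy candidates for an entity-extraction sub-task (illustrative only).
def model_a(prompt: str) -> str:
    return prompt.split()[-1]  # guesses that the last word is the entity


def model_b(prompt: str) -> str:
    return prompt.split()[0]  # guesses that the first word is the entity


CASES: List[Case] = [
    ("summarize recent activity for ACTOR-X", "ACTOR-X"),
    ("list hosts exposed to VULN-123", "VULN-123"),
]

# Each sub-task maps to whichever candidate currently scores best on its cases.
ROUTING: Dict[str, str] = {
    "entity_extraction": pick_best({"model_a": model_a, "model_b": model_b}, CASES),
}
```

Because the routing table is keyed by sub-task rather than by workflow, swapping in a newer model for one task leaves every other task untouched, which is the property the paragraph above describes.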

Charlotte AI’s multi-AI design is singular across the cybersecurity landscape, applying cutting-edge system design from the front lines of genAI research to CrowdStrike’s unsurpassed data moat of award-winning threat intelligence, cross-domain platform telemetry and over a decade of expert-labeled security telemetry. In this blog, we shed light on how it comes together.

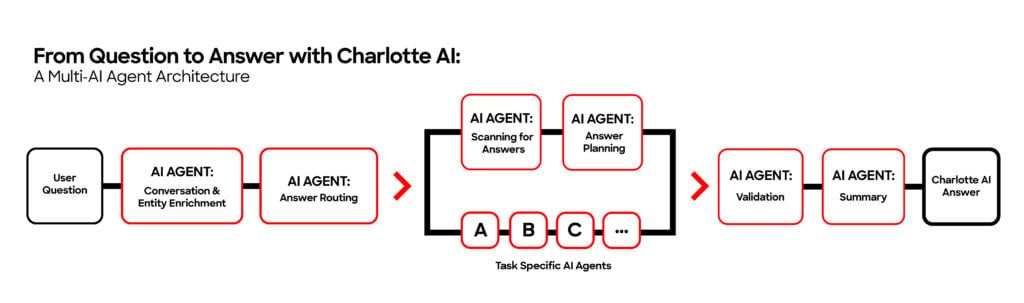

Under the Hood: From Question to Answer with AI Agents

Charlotte AI enables users to unleash the transformative power of generative AI across security workflows. With a simple question, users can activate Charlotte AI to answer questions about their environments, generate scripts or analyze emerging threat intelligence, all grounded in the high-fidelity telemetry of the Falcon platform. Charlotte AI’s natural language processing capabilities lower the level of skill and experience needed to make quick, accurate security decisions, while enabling even seasoned analysts to unlock incremental speed gains across every stage of their workflows, from surfacing time-sensitive detections, to investigating incidents, to taking action with Real Time Response.

Under the hood, Charlotte AI orchestrates over a dozen task-oriented “AI agents” to interpret a user’s question, plan the steps required to assemble a complete answer and structure the end result (Figure 1). Each AI agent is a subsystem consisting of a model and surrounding code that enables it to perform specific tasks and interact with other agents. One can think of each AI agent’s LLM (or other class of underlying model) as its “brain,” and each agent’s unique functionality (enabled by its surrounding code) as the skills that enable it to execute specific tasks.

We can think of these AI agents much like a team of doctors working in concert in an operating theater, each overseeing a specialized task, from administering anesthesia to operating on acute areas of focus. Similarly, each AI agent has a specific responsibility and area of expertise. Just as an operation requires a team of specialists to collaborate, Charlotte AI’s dynamic task force of AI agents works together to support a growing number of workflows, from summarizing threat intelligence, to writing queries in CrowdStrike Query Language (CQL), to assisting incident investigations.

- Step 1: Understand the Question: Charlotte AI first activates AI agents tasked with understanding the user’s conversation context and extracting entities referenced in the question — such as threat actors, vulnerabilities or host features.

- Step 2: Route Subtasks to AI Agents: Charlotte AI then activates a router agent, which determines which AI agent or agents should handle the user’s request.

- Step 3a: Scan for Answers: If a user asks a question that requires data from one or more API calls, the request is passed to a dedicated agent within Charlotte AI that ensures the information is retrieved and available for further processing.

- Step 3b: Plan Responses for Questions: If the user’s question doesn’t map to one or more API calls — for example, when asking Charlotte AI to generate a CQL query — Charlotte AI’s router agent can activate a number of other AI agents fine-tuned to accomplish specific tasks.

- Step 4: Validate the Plan and Retrieved Data: The runtime agent executes the API calls outlined by the prior AI agent. The output of this process is then reviewed by a validation agent, which determines whether the resulting information is complete or requires additional information. This AI agent may even issue a warning to the end user if the answer is incomplete.

- Step 5: Generate an Answer: A final AI agent structures the response to the user’s question, taking into account intuitive ways of presenting information to the end user and generating a summary of information presented.
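The steps above can be sketched as a small pipeline in which each function plays the role of one agent. This is an illustrative toy under stated assumptions, not the product's code: the `Request` class, the function names, the keyword-based router and the `FAKE_API` lookup table are all hypothetical, and plain Python rules stand in for the models each real agent would use.

```python
from dataclasses import dataclass, field
from typing import Dict, List

# Hypothetical stand-in for platform data reachable through API calls.
FAKE_API: Dict[str, str] = {"ACTOR-X": "3 detections in the last 7 days"}


@dataclass
class Request:
    question: str
    entities: List[str] = field(default_factory=list)
    route: str = ""
    data: Dict[str, str] = field(default_factory=dict)
    warnings: List[str] = field(default_factory=list)
    answer: str = ""


def understand(req: Request) -> None:
    # Step 1: toy entity extraction; all-caps tokens are treated as entities.
    tokens = [w.strip("?.,") for w in req.question.split()]
    req.entities = [t for t in tokens if len(t) > 1 and t.isupper()]


def route(req: Request) -> None:
    # Step 2: toy router; generation requests bypass the API path.
    text = req.question.lower()
    req.route = "generate" if ("write" in text or "query" in text) else "api"


def retrieve(req: Request) -> None:
    # Steps 3a and 4 (runtime): look up each entity in the stand-in API.
    req.data = {e: FAKE_API[e] for e in req.entities if e in FAKE_API}


def validate(req: Request) -> None:
    # Step 4 (validation): warn when an entity came back with no data.
    req.warnings = [f"No data found for {e}" for e in req.entities if e not in req.data]


def respond(req: Request) -> None:
    # Step 5: structure the final answer, appending any warnings.
    if req.route == "generate":
        lines = ["(a task-specific generation agent would run here)"]
    else:
        lines = [f"{name}: {value}" for name, value in req.data.items()]
    req.answer = "\n".join(lines + [f"Warning: {w}" for w in req.warnings])


def run(question: str) -> Request:
    req = Request(question)
    understand(req)
    route(req)
    if req.route == "api":
        retrieve(req)
        validate(req)
    respond(req)
    return req
```

Calling `run("What is known about ACTOR-X and ACTOR-Y?")` follows the API path and attaches a warning for the entity with no data, while `run("Write a query for failed logins")` is routed to the generation path instead.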

Guardrails against LLM Overexposure

Systems that give users direct visibility to the output of LLMs (often referred to as “naked LLMs”) risk exposing users to inaccurate information when LLMs perform unexpectedly or hallucinate — a phenomenon where LLMs provide information that is not supported by, or even contradicts, source data. Inaccurate information can have devastating implications in security, ranging from impeded productivity, to a weakened security posture, to a major breach.

Charlotte AI’s multi-AI architecture plays a critical role in enabling a safe user experience, providing buffers that insulate end-users from the direct output of LLMs. First, by having the flexibility to apply diverse models across workflows, Charlotte AI enables CrowdStrike’s data science team to limit the ripple effects of unexpected changes in performance stemming from any one model. Another way Charlotte AI buffers users against direct LLM exposure is by using an agent tasked with validating answers before they are presented to end-users, verifying that answers are both consistent with the type of result the user is expecting and grounded in Falcon platform data.
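As a rough illustration of the grounding half of that validation step, the sketch below withholds a draft answer if it mentions a host name, count or ID that never appears in the retrieved data. Everything here is an assumption made for the example: the `TOKEN` pattern, `ungrounded_terms`, `release` and the `SOURCE` records are hypothetical, and a production validator would need far more than token matching.

```python
import re
from typing import Iterable, List, Tuple

# Tokens containing at least one digit, e.g. host names, counts or IDs.
TOKEN = re.compile(r"\b[\w-]*\d[\w-]*\b")


def ungrounded_terms(draft: str, source_records: Iterable[str]) -> List[str]:
    """Digit-bearing tokens in the draft that never appear in the source data."""
    known = {t.lower() for record in source_records for t in TOKEN.findall(record)}
    return [t for t in TOKEN.findall(draft) if t.lower() not in known]


def release(draft: str, source_records: Iterable[str]) -> Tuple[bool, str]:
    """Pass the draft through only if every checked token is grounded."""
    records = list(source_records)
    missing = ungrounded_terms(draft, records)
    if missing:
        return False, "Answer withheld; unsupported terms: " + ", ".join(missing)
    return True, draft


# Illustrative records standing in for retrieved platform data.
SOURCE = ["host-01 reported 3 detections", "host-02 reported 0 detections"]
```

With these records, `release("host-01 has 3 detections.", SOURCE)` passes the draft through unchanged, while a draft mentioning `host-07` or a count of `12` is withheld because neither token appears in the source.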

Turbocharging Security Workflows: From Answer to Action

As large language models reach new levels of maturity and commoditization, security teams face a growing landscape of conversational AI assistants. Charlotte AI’s multi-AI architecture enables users to tap into the power of today’s best-of-breed foundational models and cutting-edge innovations across their workflows while minimizing the trade-offs of limiting their selection to any one model or model family. This architectural adaptability enables Charlotte AI to continuously elevate every analyst to new heights of efficiency, equipping them with the insight they need to make faster, more accurate decisions and reclaim a speed advantage against modern adversaries. For a deeper look at Charlotte AI’s architecture, download the white paper: “The Best AI for the Job: Inside Charlotte AI's Multi-AI Architecture.”

Next Steps:

- Download the white paper: Learn more about Charlotte AI’s multi-AI architecture.

- Register for the Unstoppable Innovations Series: Hear how early adopters of Charlotte AI are turning hours of work into just minutes or even seconds. Register for the series.

- Learn more: Visit the Charlotte AI product page.
